Mystic Mike’s predictions: What does 2026 hold for paid media?

By Mike Sharp, Operations Director at Launch That time of year is here. The time when I confidently predict the…

Read time:

11 mins

A/B testing Google Ads can optimise ad performance and maximise ROI. In this post, we outline how to set up and run successful Google Ads A/B tests. At Launch, we drive powerful results, and as Google Premier Partners we get exclusive insights and the latest features to boost your ads even more!

So, let’s look at how to A/B test your Google Ads and optimise ad performance.

A/B testing, also known as ‘split testing’, is a method used to compare two versions of an advert. A small change is made to a single variable, such as a headline, description or visual, and both versions are analysed to determine which one drives better results. Results are measured using key metrics such as click-through rate and conversions.

A/B testing Google Ads offers several key benefits that can enhance your advertising strategy. These include:

Here is a simple, step-by-step guide for A/B testing Google Ads.

Variables you can test include headlines, visuals, ad copy, product descriptions, audience targeting, CTAs, and bid amounts. When A/B testing, it is important to test only one variable at a time. Small changes can have a large impact on results, and by changing only one variable at a time you can be sure which change is driving the results. You can jump to our Google Ads Test Ideas section to learn more about how to improve each of these variables.

Google recommends giving an A/B testing experiment at least two weeks to start working. If three conversion cycles take longer than two weeks, wait for three conversion cycles instead. You can use the bid strategy report to find out how long it takes your customers to convert. After this initial period, it is recommended to let the experiment run for 30 days without any interruptions.
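The timing guidance above can be sketched as a small calculation. This is only an illustration: the function names are hypothetical, the conversion cycle length is something you would read from your own bid strategy report, and it assumes the 30-day run is counted from the end of the initial period.

```python
import math
from datetime import date, timedelta


def ramp_up_days(conversion_cycle_days: float) -> int:
    """Initial period: two weeks or three conversion cycles, whichever is longer."""
    return max(14, math.ceil(3 * conversion_cycle_days))


def experiment_schedule(start: date, conversion_cycle_days: float, run_days: int = 30):
    """Return (first day worth evaluating, earliest end date) for an experiment.

    Assumption: the uninterrupted run_days window begins once the
    initial ramp-up period has finished.
    """
    evaluation_start = start + timedelta(days=ramp_up_days(conversion_cycle_days))
    earliest_end = evaluation_start + timedelta(days=run_days)
    return evaluation_start, earliest_end


if __name__ == "__main__":
    # Hypothetical example: customers take about 7 days to convert.
    first_day, end_day = experiment_schedule(date(2025, 1, 1), conversion_cycle_days=7)
    print(first_day, end_day)
```

With a seven-day conversion cycle, three cycles (21 days) is longer than two weeks, so the initial period is 21 days rather than 14.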

Clearly define your success metrics before running a split test. Key metrics may include click-through rate (CTR), conversion rate, cost per click (CPC), cost per conversion (CPA), return on ad spend (ROAS) and bounce rates. Make sure you record the current metrics prior to the A/B test so that you can compare figures and measure the difference in performance results.
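As a rough illustration of how these metrics relate to each other, the sketch below calculates them from raw totals. The `AdStats` class, its field names and the example figures are all hypothetical and do not come from a Google Ads export; bounce rate is left out because it is reported by your analytics tool rather than derived from these totals.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    impressions: int
    clicks: int
    conversions: int
    cost: float     # total spend
    revenue: float  # revenue attributed to the ad


def key_metrics(s: AdStats) -> dict:
    """Compute the headline metrics, returning 0.0 where a denominator is zero."""
    return {
        "ctr": s.clicks / s.impressions if s.impressions else 0.0,
        "conversion_rate": s.conversions / s.clicks if s.clicks else 0.0,
        "cpc": s.cost / s.clicks if s.clicks else 0.0,
        "cpa": s.cost / s.conversions if s.conversions else 0.0,
        "roas": s.revenue / s.cost if s.cost else 0.0,
    }


if __name__ == "__main__":
    # Made-up baseline figures recorded before the test starts.
    baseline = AdStats(impressions=10_000, clicks=500, conversions=25,
                       cost=475.0, revenue=1_900.0)
    for name, value in key_metrics(baseline).items():
        print(f"{name}: {value:.4f}")
```

Recording a baseline like this before the test makes the later comparison with the variation a like-for-like one.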

There are three ways to run Google Ads A/B tests: with Google Experiments, manually, or with third-party tools. We recommend using Google Experiments within your Google Ads dashboard. Google Experiments lets you pick up to two goals to measure, and these goals are how you judge whether an experiment has succeeded. The same metrics are also available in the campaign report. Here is an overview of each method.

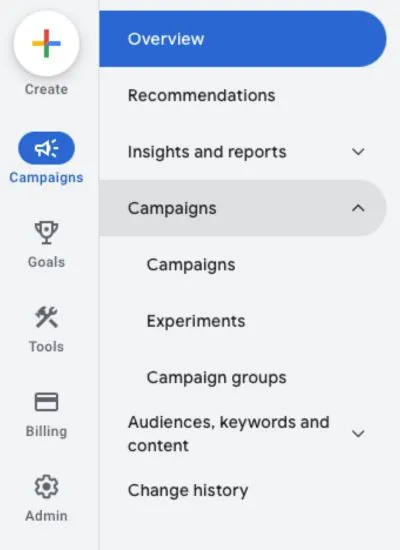

1. Navigate to the ‘Campaigns’ icon in your Google Ads account.

2. Click the icon and select the drop-down menu on the left-hand side that says ‘campaigns,’ followed by ‘experiments.’

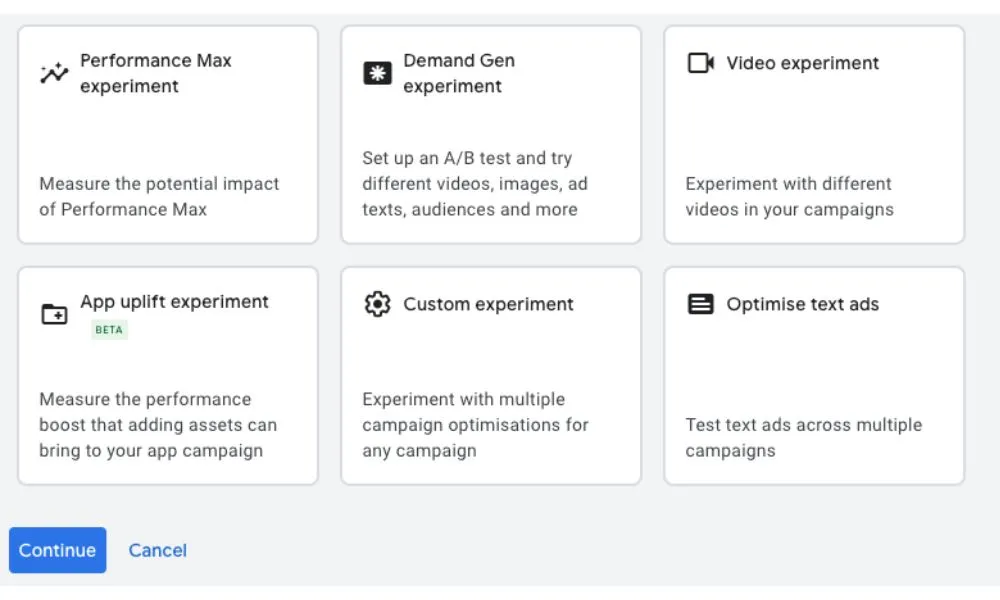

3. To create a new A/B test, click the blue and white plus button (or the ‘create an experiment’ button).

4. Choose from the following options: performance max experiment, demand gen experiment, video experiment, app uplift experiment, custom experiment or optimise text ads. For this example, we’re going to optimise text ads.

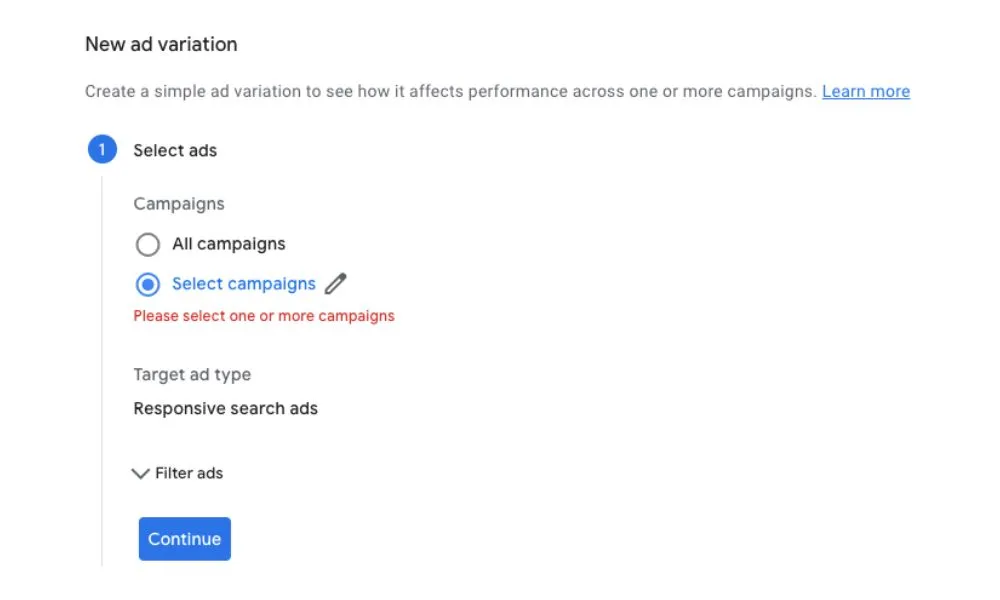

5. Google will ask you to create a new ad variation. You can choose to run the variation across all of your campaigns or choose a specific one.

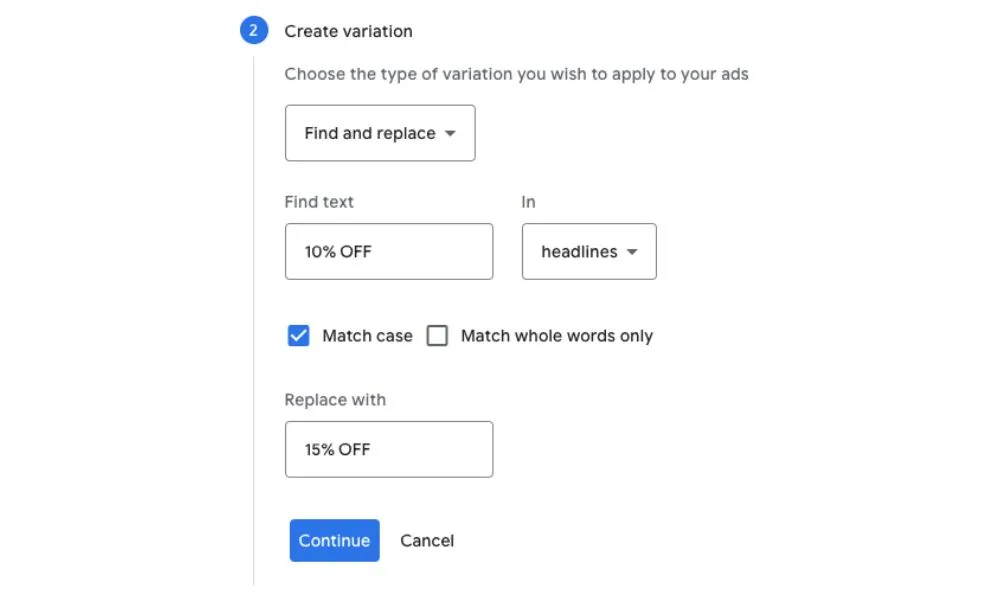

6. Next, you’ll choose what you want to test. In this example, we are going to test ‘10% OFF’ vs ‘15% OFF’.

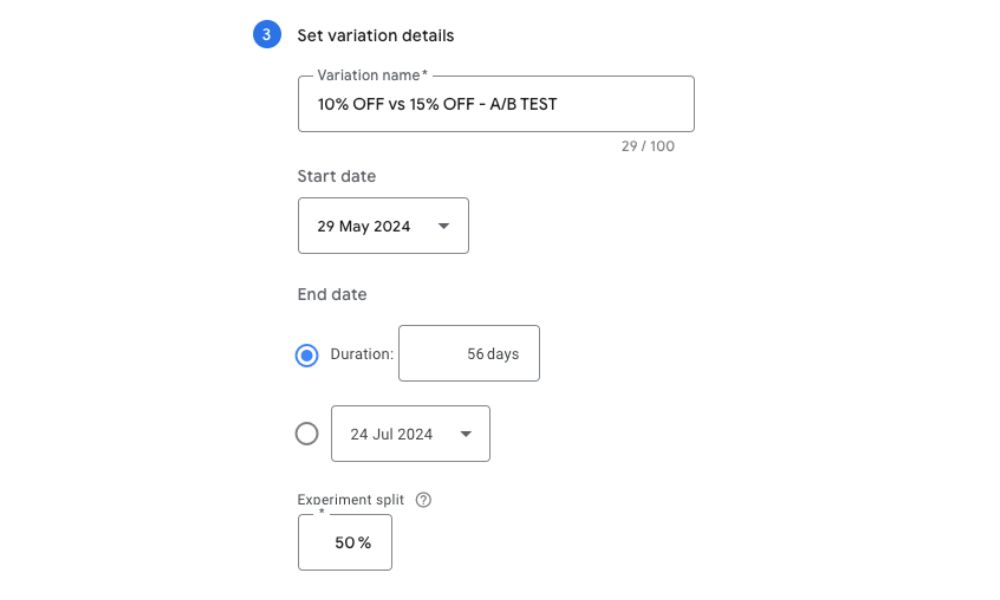

7. Then you’ll name your variation, select a start date and select the experiment split, which determines the share of traffic that is served the variation rather than the original ad. We have selected 50% to ensure our results are unbiased.

8. Once submitted, you’ll see it appear under your experiments tab.

Some people prefer to A/B test manually, as it allows for full control and granular insights. As with Google Experiments, objectives must be defined first, and a variation must be created with an equal split. When testing manually, both versions of the ad must be launched at the same time to control for external factors. A/B split testing calculators can be used to determine whether the difference between the control and the variation is statistically significant.
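To show the kind of calculation a split testing calculator might perform, here is a minimal sketch of a two-proportion z-test using only the Python standard library. It is one common approach and not necessarily the method any particular calculator uses; the function name and the example figures are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare two conversion rates; return (z score, two-sided p-value).

    conv_a / n_a: conversions and clicks for the control ad.
    conv_b / n_b: conversions and clicks for the variation.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation in the data (all or no clicks converted): no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up figures: 200 of 10,000 clicks convert on the control, 260 of 10,000 on the variation.
    z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A common convention is to treat a p-value below 0.05 as statistically significant, although the threshold you choose should reflect how costly a wrong decision would be.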

It is also possible to A/B test Google Ads with third-party tools such as A/B Smartly, Adobe Target and Eppo. These tools have powerful features but may add layers of complexity and take additional time to learn and navigate. These may also involve extra subscription fees, and it is worth noting that not all third-party apps integrate seamlessly with Google Ads.

When analysing A/B test results, it is important to account for conversion delay. When evaluating performance, exclude the first two weeks (or three conversion cycles) from your results, along with any recent days where fewer than 90% of conversions have been reported. After excluding these periods, compare performance between the original ad and the ad variation for key metrics such as click-through rate (CTR), conversion rate, cost per click (CPC), cost per conversion (CPA), return on ad spend (ROAS) and bounce rate.
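The exclusion rules above could be applied to daily data along these lines. This is a sketch under assumptions: the row format, the `reported_share` field (your own estimate of how complete each day's conversions are) and the function name are hypothetical, not part of any Google Ads report.

```python
from datetime import date, timedelta


def days_to_analyse(daily_rows, start: date, ramp_up_days: int = 14,
                    min_reported_share: float = 0.9):
    """Keep only the days that should count towards the comparison.

    Drops days inside the initial ramp-up period and days where the
    estimated share of reported conversions is below the threshold.
    """
    first_valid_day = start + timedelta(days=ramp_up_days)
    return [
        row for row in daily_rows
        if row["day"] >= first_valid_day
        and row["reported_share"] >= min_reported_share
    ]


if __name__ == "__main__":
    # Made-up daily rows for an experiment that started on 1 January 2025.
    rows = [
        {"day": date(2025, 1, 5), "reported_share": 1.0},   # inside ramp-up: dropped
        {"day": date(2025, 1, 15), "reported_share": 1.0},  # kept
        {"day": date(2025, 2, 1), "reported_share": 0.95},  # kept
        {"day": date(2025, 2, 10), "reported_share": 0.6},  # conversions still arriving: dropped
    ]
    for row in days_to_analyse(rows, start=date(2025, 1, 1)):
        print(row["day"])
```

If three conversion cycles are longer than two weeks, pass that longer figure as `ramp_up_days`.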

If the variation delivers a significant improvement, apply the change to the ad. If not, create a new experiment, select a different variable to adjust, and run it. It is important to monitor ads continuously, watch for trends, and make improvements when necessary.

Here is a list of variables to select and test while running Google Ads, as well as ways to improve each variable’s performance.

Improvements to make to headlines when A/B testing:

Changes to make to visuals when A/B testing ads:

Note that Google Search Ads do not use images, so choose a text-based variation for these.

Improvements to make to ad copy when A/B testing ads:

Ways to improve product descriptions when split testing Google Ads:

Changes to make to audience targeting with Google Ads A/B testing:

Ways to improve your Google Ad call to action:

Improvements to make to your bid amount:

In 2020, during lockdown, we tailored ad copy in Aria Resorts campaigns to push a ‘flexi-booking’ message whilst also expanding remarketing campaigns. Working closely with Aria’s internal marketing team, we used past data to find ‘similar audiences’, and with this data-driven approach they received a 2,833% return on ad spend!

Argyll was using paid search but found it ineffective. We supported Argyll in getting specific with keyword targeting, ensuring higher-quality leads and using their budget more efficiently. We also migrated top-performing search campaigns onto smart bidding strategies to keep to the target cost per lead. They saw a 374% increase in leads and a 69% decrease in cost per lead, a win-win situation.

Using A/B testing for Google Ads can significantly impact results. It is possible to run A/B tests with Google Experiments, manually, or using third-party apps. However, we recommend using Google Experiments and hiring an expert to manage and optimise campaigns carefully; A/B testing is an ongoing process that requires careful consideration, a close eye, and a wealth of expertise.

At Launch, we are experienced at boosting website growth for clients with data-driven experimentation programmes. We use the power of conversion rate optimisation (CRO) to grow revenue and increase return on investment (ROI). We are passionate about breathing new life into performance campaigns with fresh thinking and the latest technological developments. Contact us today to find out more about our CRO services and experimentation programmes.

It is free to set up a Google Ads account; however, you will be charged once your campaigns are set up and running. In 2023, the average cost per click was £0.95. Many open-source, free third-party apps exist; however, these may have limited features or require a subscription to access all features.

An example of A/B testing for Google Ads could be testing two different ad headlines for a company that sells hot tubs to see which one generates more clicks and conversions. For instance, the existing campaign may use the headline ‘Buy Luxury Hot Tubs’, and the company could A/B test this against a variation that states ‘Experience Luxury Hot Tubs’. If the variation produced better results, it would be in the company’s interest to update the ad to the variation.

A/B testing can be highly effective in improving conversion rates. By comparing different versions, businesses can identify the most impactful changes and make data-driven decisions. It allows for continuous optimisation and refinement, leading to better user experiences and increased ROI.

Several tools are used for A/B testing, including Google Experiments, A/B Smartly, Eppo and Adobe Target. These tools allow businesses to set up variations, track user behaviour and measure the impact of different elements on conversion rates.
